Will explore more interface apps and plugins to link dev sections, like:

Blog

-

Stack Overflow

Stack activity. My wish for the world: that all questions get answered <3

https://stackexchange.com/users/1814694/florian-isopp?tab=activity

https://stackoverflow.com/users/1650038/florian-isopp?tab=questions

-

NUnit from scratch

https://github.com/nunit/nunit-vs-templates

Same here as below: I don't want to install all sorts of extensions etc. just to scour through examples.

https://github.com/nunit/dotnet-new-nunit

Skip this, trust me, it is trash 😉 and not even compatible enough to open.

https://docs.microsoft.com/en-us/dotnet/core/testing/unit-testing-with-nunit

Follow-up and self-contained repo:

https://github.com/flowxcode/nunit-template

According to the MS docs, to test the tests:

https://nunit.org/nunitv2/docs/2.6.4/quickStart.html

Started to debug, then decided to analyse the templates and the MS description. Building a scratch project to debug and build up a proper architecture:

/unit-testing-using-nunit
    unit-testing-using-nunit.sln
    /PrimeService
        Source Files
        PrimeService.csproj
    /PrimeService.Tests
        Test Source Files
        PrimeService.Tests.csproj

https://docs.educationsmediagroup.com/unit-testing-csharp/nunit/lifecycle-of-a-test-fixture

Lifecycle of a test fixture

As mentioned before, NUnit gives the developer the possibility to extract all initialization and tear-down code that multiple tests might be sharing into ad-hoc methods.

Developers can take advantage of the following facilities to streamline their fixtures:

- A method decorated with the SetUp attribute will be executed before each test.

- A method decorated with the TearDown attribute will be executed after each test.

- A method decorated with the OneTimeSetUp attribute will be executed before any test is executed.

- A method decorated with the OneTimeTearDown attribute will be executed after all tests have been executed.

- The class constructor will be executed before any method and can be used to prepare fields that shouldn’t be modified while executing the tests.
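You can check the lifecycle above empirically. NUnit itself needs a .NET toolchain, so this sketch swaps in Python's standard unittest module. Its hooks map roughly onto the NUnit attributes: setUpClass/tearDownClass correspond to OneTimeSetUp/OneTimeTearDown, and setUp/tearDown correspond to SetUp/TearDown. It is an analogy, not NUnit. The class name and the events list are illustrative.

```python
import io
import unittest

# Shared log of lifecycle events, so the execution order can be inspected afterwards.
events = []


class LifecycleDemo(unittest.TestCase):
    """Mirrors the NUnit fixture lifecycle with unittest's analogous hooks (analogy only)."""

    @classmethod
    def setUpClass(cls):  # roughly NUnit [OneTimeSetUp]
        events.append("one_time_setup")

    @classmethod
    def tearDownClass(cls):  # roughly NUnit [OneTimeTearDown]
        events.append("one_time_teardown")

    def setUp(self):  # roughly NUnit [SetUp], runs before each test
        events.append("setup")

    def tearDown(self):  # roughly NUnit [TearDown], runs after each test
        events.append("teardown")

    def test_a(self):
        events.append("test_a")

    def test_b(self):
        events.append("test_b")


def run_demo():
    """Run the two tests quietly and return the recorded event order."""
    events.clear()
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(LifecycleDemo)
    unittest.TextTestRunner(stream=io.StringIO(), verbosity=0).run(suite)
    return list(events)


if __name__ == "__main__":
    print(run_demo())
```

One difference is worth knowing. unittest creates a new TestCase instance per test method. NUnit by default creates one fixture instance that all tests in the fixture share, which is why the constructor note above matters for NUnit fields.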

Additionally, developers can set up fixtures contained in a namespace and all its children by creating a class decorated with the SetUpFixture attribute. This class will be able to contain methods decorated with the OneTimeSetUp and OneTimeTearDown attributes.

NUnit supports multiple SetUpFixture classes. In this case, setup methods will be executed starting from the most external namespace inwards, and the teardown methods from the most internal namespace outwards.

NUnit references:

https://automationintesting.com/csharp/nunit/lessons/whataretestfixtures.html


-

secure authentication

Read the counter of authentication attempts:

The INITIALIZE UPDATE command is used, during explicit initiation of a Secure Channel, to transmit card and session data between the card and the host. This command initiates the initiation of a Secure Channel Session.

Read the counter from the APDU response.

Findings: the counter increases across 2 APDU responses.

https://www.rapidtables.com/convert/number/hex-to-decimal.html

https://www.scadacore.com/tools/programming-calculators/online-hex-converter/
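Instead of pasting bytes into the online converters, you can read the counter programmatically. This minimal sketch assumes the SCP02 layout of the INITIALIZE UPDATE response from the GlobalPlatform Card Specification: 10 bytes key diversification data, 2 bytes key information, 2 bytes sequence counter, 6 bytes card challenge, 8 bytes card cryptogram, then the status word. Other secure channel protocols such as SCP03 lay the response out differently. The response bytes in the usage example are made up for illustration.

```python
def scp02_sequence_counter(response_hex: str) -> int:
    """Extract the 2-byte sequence counter from an INITIALIZE UPDATE response.

    ASSUMPTION: SCP02 layout, 28 data bytes + 2-byte status word:
      [0:10]  key diversification data
      [10:12] key information (key version, SCP identifier)
      [12:14] sequence counter (big-endian)
      [14:20] card challenge
      [20:28] card cryptogram
      [28:30] status word, expected 90 00
    """
    data = bytes.fromhex(response_hex)  # fromhex tolerates spaces between bytes
    if len(data) != 30:
        raise ValueError(f"expected 30 bytes (28 data + SW), got {len(data)}")
    if data[-2:] != b"\x90\x00":
        raise ValueError(f"unexpected status word {data[-2:].hex().upper()}")
    return int.from_bytes(data[12:14], "big")


if __name__ == "__main__":
    # Two made-up responses whose counters differ by one (hypothetical data).
    first = "00" * 10 + "2002" + "001A" + "11" * 6 + "22" * 8 + "9000"
    second = "00" * 10 + "2002" + "001B" + "11" * 6 + "22" * 8 + "9000"
    print(scp02_sequence_counter(first), scp02_sequence_counter(second))  # 26 27
```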

-

dmx.

Coding plan: visualise first; notes and ideas from

-

GitHub Pages automation projects

Going with 3 GitHub Pages repos, active at the moment. I want to automate the CV build and manage complex playgrounds and code books.

https://flowxcode.github.io/hpage/

-

Snippet: first #Groovy script for Jenkins

A whole Jenkins day today. Started with log production and automation, ended with API triggers and log analytics.

Groovy script base for Jenkins. Note: it should be checked into SCM so that Jenkins runs it as code. So don't skip the fun parts and get stuck halfway through.

Checked in some stubs; the full project is not disclosed.

https://github.com/flowxcode/shellexperimental

-

NUnit setup tomorrow for the test setup

Things to be done tomorrow; details to be provided and worked out:

- SetUpAttribute is now used exclusively for per-test setup.

- TearDownAttribute is now used exclusively for per-test teardown.

- OneTimeSetUpAttribute is used for one-time setup per test-run. If you run n tests, this event will only occur once.

- OneTimeTearDownAttribute is used for one-time teardown per test-run. If you run n tests, this event will only occur once.

- SetUpFixtureAttribute continues to be used as before, but with changed method attributes.

-

Factory pattern adaptations, real world

Adapt a pattern and build a real-world example into it.

https://github.com/flowxcode/dotnet-design-patterns-samples#factory-method

https://github.com/flowxcode/dotnet-design-patterns-samples/tree/master/Generating/FactoryMethod

- build pattern

- introduce config

- locate point from where to read config in current project

- read config early and compare in diagrams
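Here is a minimal Python sketch of the plan above: build the pattern, introduce config, and read it early. The names DeviceMapper, DeviceFactory and Device come from these notes. The concrete device classes, the config keys and the registry dictionary are hypothetical stand-ins, not the project's actual code.

```python
from abc import ABC, abstractmethod


class Device(ABC):
    """Base class all devices inherit from."""

    def __init__(self, name: str):
        self.name = name

    @abstractmethod
    def connect(self) -> str:
        ...


class ReaderDevice(Device):  # hypothetical concrete device
    def connect(self) -> str:
        return f"reader {self.name} connected"


class EmulatorDevice(Device):  # hypothetical concrete device
    def connect(self) -> str:
        return f"emulator {self.name} connected"


class DeviceFactory:
    """Factory method: maps a device type string from config to a Device subclass."""

    # Hypothetical registry; a real project might fill this from config or reflection.
    _registry = {"reader": ReaderDevice, "emulator": EmulatorDevice}

    def create(self, device_type: str, name: str) -> Device:
        try:
            cls = self._registry[device_type]
        except KeyError:
            raise ValueError(f"unknown device type: {device_type!r}") from None
        return cls(name)


class DeviceMapper:
    """Reads the (hypothetical) config early and asks a new DeviceFactory for each device."""

    def __init__(self, config: dict):
        self.factory = DeviceFactory()
        self.devices = [
            self.factory.create(entry["type"], entry["name"])
            for entry in config.get("devices", [])
        ]


if __name__ == "__main__":
    config = {"devices": [{"type": "reader", "name": "R1"}, {"type": "emulator", "name": "E1"}]}
    for device in DeviceMapper(config).devices:
        print(device.connect())
```

Keeping the type-to-class mapping inside the factory means the caller only deals with config strings and the Device base class. Adding a device type then touches one place.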

the factory pattern:

TestFixtureContextBase.cs

DeviceMapper -> gets a new DeviceFactory, which creates Devices; Devices inherit from the Device class.

-

TestFixture NUnit Tests floowww flow

Call stack directly after the test class constructor:

TestFixture.Core.TestFixtureContextBase.OneTimeSetup() Line 46 C#

-

.dll Type Library Importer Tlbimp.exe and Tlbexp.exe

Wanted to add the AwUsbApi.dll library but got

Trying to validate the DLLs:

https://stackoverflow.com/questions/3456758/a-reference-to-the-dll-could-not-be-added

Using the VS Developer Command Prompt:

https://docs.microsoft.com/en-us/visualstudio/ide/reference/command-prompt-powershell?view=vs-2022

C:\Program Files\Digi\AnywhereUSB\Advanced>tlbimp AwUsbApi.dll
Microsoft (R) .NET Framework Type Library to Assembly Converter 4.8.3928.0
Copyright (C) Microsoft Corporation. All rights reserved.

TlbImp : error TI1002 : The input file 'C:\Program Files\Digi\AnywhereUSB\Advanced\AwUsbApi.dll' is not a valid type library.

C:\Program Files\Digi\AnywhereUSB\Advanced>Tlbexp AwUsbApi.dll
Microsoft (R) .NET Framework Assembly to Type Library Converter 4.8.3928.0
Copyright (C) Microsoft Corporation. All rights reserved.

TlbExp : error TX0000 : Could not load file or assembly 'file:///C:\Program Files\Digi\AnywhereUSB\Advanced\AwUsbApi.dll' or one of its dependencies. The module was expected to contain an assembly manifest.

This is not a new problem; it was already reported on the Digi forum:

https://www.digi.com/support/forum/27954/problems-with-awusbapi-dll
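TlbImp only accepts COM type libraries and TlbExp only accepts .NET assemblies. "The module was expected to contain an assembly manifest" is what .NET reports for a file that is not a managed assembly. That suggests AwUsbApi.dll is a plain native DLL. A native DLL cannot be added as a .NET reference and is usually called through P/Invoke ([DllImport]) instead. To check quickly, look for the CLR runtime header (data directory entry 14) in the PE optional header. This is a header-only diagnostic sketch that assumes a well-formed PE file, not a full PE parser.

```python
import struct

CLR_RUNTIME_HEADER_INDEX = 14  # IMAGE_DIRECTORY_ENTRY_COM_DESCRIPTOR


def is_managed_assembly(data: bytes) -> bool:
    """Return True if the PE image in `data` has a CLR (.NET) runtime header."""
    if data[:2] != b"MZ":
        raise ValueError("not a PE file (missing MZ signature)")
    pe_offset = struct.unpack_from("<I", data, 0x3C)[0]  # e_lfanew
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("missing PE signature")
    optional_header = pe_offset + 4 + 20  # skip "PE\0\0" and the 20-byte COFF header
    magic = struct.unpack_from("<H", data, optional_header)[0]
    if magic == 0x10B:    # PE32 (32-bit)
        count_offset, dirs_offset = 92, 96
    elif magic == 0x20B:  # PE32+ (64-bit)
        count_offset, dirs_offset = 108, 112
    else:
        raise ValueError(f"unknown optional header magic {magic:#x}")
    num_dirs = struct.unpack_from("<I", data, optional_header + count_offset)[0]
    if num_dirs <= CLR_RUNTIME_HEADER_INDEX:
        return False
    # Each data directory entry is 8 bytes: RVA (4) + size (4).
    entry = optional_header + dirs_offset + 8 * CLR_RUNTIME_HEADER_INDEX
    rva, size = struct.unpack_from("<II", data, entry)
    return rva != 0 and size != 0
```

Usage: `is_managed_assembly(open(r"C:\path\to\AwUsbApi.dll", "rb").read())`. A result of False points to a native DLL.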

-

Continued: REST API setup

extended path on post:

additional base preps:

IIS:

Firewall:

related:

-

string.Empty or ""

One pretty fundamental answer,

(c) Stack Overflow:

There really is no difference from a performance and code generated standpoint. In performance testing, they went back and forth between which one was faster vs the other, and only by milliseconds.

In looking at the behind-the-scenes code, you really don’t see any difference either. The only difference is in the IL, where string.Empty uses the opcode ldsfld and "" uses the opcode ldstr, but that is only because string.Empty is static, and both instructions do the same thing. If you look at the assembly that is produced, it is exactly the same.

C# Code

private void Test1()
{
    string test1 = string.Empty;
    string test11 = test1;
}

private void Test2()
{
    string test2 = "";
    string test22 = test2;
}

IL Code

.method private hidebysig instance void Test1() cil managed
{
  // Code size 10 (0xa)
  .maxstack 1
  .locals init ([0] string test1,
                [1] string test11)
  IL_0000: nop
  IL_0001: ldsfld string [mscorlib]System.String::Empty
  IL_0006: stloc.0
  IL_0007: ldloc.0
  IL_0008: stloc.1
  IL_0009: ret
} // end of method Form1::Test1

.method private hidebysig instance void Test2() cil managed
{
  // Code size 10 (0xa)
  .maxstack 1
  .locals init ([0] string test2,
                [1] string test22)
  IL_0000: nop
  IL_0001: ldstr ""
  IL_0006: stloc.0
  IL_0007: ldloc.0
  IL_0008: stloc.1
  IL_0009: ret
} // end of method Form1::Test2

Assembly code

string test1 = string.Empty;
0000003a mov eax,dword ptr ds:[022A102Ch]
0000003f mov dword ptr [ebp-40h],eax

string test11 = test1;
00000042 mov eax,dword ptr [ebp-40h]
00000045 mov dword ptr [ebp-44h],eax

string test2 = "";
0000003a mov eax,dword ptr ds:[022A202Ch]
00000040 mov dword ptr [ebp-40h],eax

string test22 = test2;
00000043 mov eax,dword ptr [ebp-40h]
00000046 mov dword ptr [ebp-44h],eax

-

COMException

System.Runtime.InteropServices.COMException (0x800703FA): Retrieving the COM class factory for component with CLSID {82EAAE85-00A5-4FE1-8BA7-8DBBACCC6BEA} failed due to the following error: 800703fa Illegal operation attempted on a registry key that has been marked for deletion. (0x800703FA).

at System.RuntimeTypeHandle.AllocateComObject(Void* pClassFactory)

at System.RuntimeType.CreateInstanceDefaultCtor(Boolean publicOnly, Boolean wrapExceptions)

at System.Activator.CreateInstance(Type type, Boolean nonPublic, Boolean wrapExceptions)

at System.Activator.CreateInstance(Type type)

at …

Solution

Moved the instance creation into the constructor:

public KeoObj()
{
    _proxiLab = (IProxiLAB)Activator.CreateInstance(Type.GetTypeFromProgID("KEOLABS.ProxiLAB"));
}

Always a charm; clean code:

possible relevance:

link base:

http://adopenstatic.com/cs/blogs/ken/archive/2008/01/29/15759.aspx

-

the AMR command

section 4.5.1, APPLICATION MANAGEMENT REQUEST (AMR) Command

https://globalplatform.org/wp-content/uploads/2014/03/GPC_ISO_Framework_v1.0.pdf

Ideas: tear, check, and repeat.
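One way to structure "tear, check, and repeat" is a sweep over the tearing delay. Tear at a given offset, re-initialise the protocol, check the card state, and move on. The tearing notes further down found the re-initialisation necessary after PICC timeouts. This is a hypothetical sketch: tear, reinit and read_state are placeholder callables for whatever the ProxiLAB/SPulse calls end up being, not Keolabs API names.

```python
from typing import Callable, Dict, Iterable


def tearing_sweep(
    delays: Iterable[int],
    tear: Callable[[int], None],
    reinit: Callable[[], None],
    read_state: Callable[[], str],
) -> Dict[int, str]:
    """Tear at each delay, re-initialise the card, and record the observed state.

    All three callables are HYPOTHETICAL hooks supplied by the caller.
    """
    results = {}
    for delay in delays:
        tear(delay)        # cut RF power `delay` cycles into the command
        reinit()           # re-run activation, otherwise Tcl commands may time out
        results[delay] = read_state()
    return results


if __name__ == "__main__":
    # Fake card for illustration: the write only "commits" if power is cut after cycle 50.
    card = {"committed": False}

    def fake_tear(delay):
        card["committed"] = delay > 50

    def fake_reinit():
        pass

    def fake_read():
        return "new" if card["committed"] else "old"

    print(tearing_sweep(range(0, 101, 25), fake_tear, fake_reinit, fake_read))
```

The resulting map from delay to state shows where the card switches from the old to the new value. That is the window worth sweeping more finely.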

Run multiple parameterized tests with NUnit, as in:

https://www.lambdatest.com/blog/nunit-parameterized-test-examples/

[Test]
[TestCase("chrome", "72.0", "Windows 10")]
[TestCase("internet explorer", "11.0", "Windows 10")]
[TestCase("Safari", "11.0", "macOS High Sierra")]
[TestCase("MicrosoftEdge", "18.0", "Windows 10")]
[Parallelizable(ParallelScope.All)]
public void DuckDuckGo_TestCase_Demo(String browser, String version, String os)
{
    String username = "user-name";
    String accesskey = "access-key";
    String gridURL = "@hub.lambdatest.com/wd/hub";

-

Set up an ASP.NET API on an IIS path:

- Add a website and create its folder, e.g. c:\iis\myapi

- Change the application pool to No Managed Code

- Check the security settings of the folder and add:

  - IIS_IUSRS

  - IIS AppPool\myapi

- Browse the created website (this should result in HTTP Error 403.14 – Forbidden, because the folder is still empty and its contents may not be listed)

- Create a new repo, e.g. on GitHub

- Create a new Visual Studio ASP.NET Core Web API project

- Run and debug

- Try the API endpoints

- Publish to a target folder (ideally a separate folder that you copy from after publishing)

- Copy the contents (e.g. with TeraCopy, or a diff/sync tool)

- Try the sample endpoint

- Note that Swagger is not configured to show by default (the template only enables it in the Development environment), so don't despair

- Commit your state once it works

- Check git status to make sure everything is tracked correctly

- Rename WeatherForecast to your desired name, e.g. with Notepad++ Replace All, and rename the files in VS later on

- Test run and debug

- Publish again

- Test it on the local IIS
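The steps "try the sample endpoint" and "test it on the local IIS" can be scripted, which makes each publish-and-copy cycle quicker to verify. This minimal sketch uses only Python's standard library. The base URL and the paths are assumptions to adjust. /WeatherForecast is the ASP.NET Core Web API template's default controller route until you rename it.

```python
import urllib.error
import urllib.request


def smoke_test(base_url: str, paths, timeout: float = 5.0) -> dict:
    """GET each path under base_url and return {path: HTTP status code or error text}."""
    results = {}
    for path in paths:
        url = base_url.rstrip("/") + "/" + path.lstrip("/")
        try:
            with urllib.request.urlopen(url, timeout=timeout) as response:
                results[path] = response.status
        except urllib.error.HTTPError as err:  # 4xx/5xx: the server answered, but with an error
            results[path] = err.code
        except urllib.error.URLError as err:   # DNS failure, refused connection, firewall, ...
            results[path] = f"unreachable: {err.reason}"
    return results
```

Usage, e.g. after each publish: `smoke_test("http://localhost:8080", ["/WeatherForecast", "/swagger/index.html"])`. The port is a placeholder. A 403 on the bare site root is expected until content is deployed, and a 500 usually points at the application rather than IIS.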

Works up to here. Now integrate the business logic and repeat the small-steps cycle, step by step.

Sources of error, investigation:

Custom error and exception handling

The API is functional, but there were problems showing the Swagger site. Steps:

- Had to check the security settings of the COM object,

due to this Event Viewer error description:

Category: Microsoft.AspNetCore.Server.IIS.Core.IISHttpServer
EventId: 2
SpanId: cb7174b3e1054a29
TraceId: 789b2e9b100bdb4d3fe6ed8e154135f1
ParentId: 0000000000000000
RequestId: 80000006-0007-ff00-b63f-84710c7967bb
RequestPath: /Keo/CreateKeoObj

Connection ID "18374686511347007489", Request ID "80000006-0007-ff00-b63f-84710c7967bb": An unhandled exception was thrown by the application.

Exception: System.UnauthorizedAccessException: Retrieving the COM class factory for component with CLSID {82EAAE85-00A5-4FE1-8BA7-8DBBACCC6BEA} failed due to the following error: 80070005 Access is denied. (0x80070005 (E_ACCESSDENIED)).
   at System.RuntimeTypeHandle.AllocateComObject(Void* pClassFactory)
   at System.RuntimeType.CreateInstanceDefaultCtor(Boolean publicOnly, Boolean wrapExceptions)
   at System.Activator.CreateInstance(Type type, Boolean nonPublic, Boolean wrapExceptions)
   at System.Activator.CreateInstance(Type type)
   at KeoCoreApi.KeoObj.CreateKeoInstance() in C:\git\keolabs\KeoCoreApi\KeoObj.cs:line 18
   at lambda_method4(Closure , Object , Object[] )
   at Microsoft.AspNetCore.Mvc.Infrastructure.ActionMethodExecutor.SyncObjectResultExecutor.Execute(IActionResultTypeMapper mapper, ObjectMethodExecutor executor, Object controller, Object[] arguments)
   at Microsoft.AspNetCore.Mvc.Infrastructure.ControllerActionInvoker.InvokeActionMethodAsync()
   at Microsoft.AspNetCore.Mvc.Infrastructure.ControllerActionInvoker.Next(State& next, Scope& scope, Object& state, Boolean& isCompleted)
   at Microsoft.AspNetCore.Mvc.Infrastructure.ControllerActionInvoker.InvokeNextActionFilterAsync()
--- End of stack trace from previous location ---
   at Microsoft.AspNetCore.Mvc.Infrastructure.ControllerActionInvoker.Rethrow(ActionExecutedContextSealed context)
   at Microsoft.AspNetCore.Mvc.Infrastructure.ControllerActionInvoker.Next(State& next, Scope& scope, Object& state, Boolean& isCompleted)
   at Microsoft.AspNetCore.Mvc.Infrastructure.ControllerActionInvoker.InvokeInnerFilterAsync()
--- End of stack trace from previous location ---
   at Microsoft.AspNetCore.Mvc.Infrastructure.ResourceInvoker.<InvokeFilterPipelineAsync>g__Awaited|20_0(ResourceInvoker invoker, Task lastTask, State next, Scope scope, Object state, Boolean isCompleted)
   at Microsoft.AspNetCore.Mvc.Infrastructure.ResourceInvoker.<InvokeAsync>g__Awaited|17_0(ResourceInvoker invoker, Task task, IDisposable scope)
   at Microsoft.AspNetCore.Mvc.Infrastructure.ResourceInvoker.<InvokeAsync>g__Awaited|17_0(ResourceInvoker invoker, Task task, IDisposable scope)
   at Microsoft.AspNetCore.Routing.EndpointMiddleware.<Invoke>g__AwaitRequestTask|6_0(Endpoint endpoint, Task requestTask, ILogger logger)
   at Microsoft.AspNetCore.Authorization.AuthorizationMiddleware.Invoke(HttpContext context)
   at Swashbuckle.AspNetCore.SwaggerUI.SwaggerUIMiddleware.Invoke(HttpContext httpContext)
   at Swashbuckle.AspNetCore.Swagger.SwaggerMiddleware.Invoke(HttpContext httpContext, ISwaggerProvider swaggerProvider)
   at Microsoft.AspNetCore.Server.IIS.Core.IISHttpContextOfT`1.ProcessRequestAsync()

Next steps, if resolved: test from a different computer on the intranet:

- Connect to the host IP and port

- If the connection does not go through, check the firewall rules

- Go to Advanced settings

- Open the required port by adding an inbound rule of type Port

- Test the API from a computer on the network

- Append /swagger, or the desired endpoint, to the URL
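From the network computer, first confirm that the port is reachable at all. This separates firewall problems from application problems. Here is a minimal sketch with Python's socket module; the host and port in the usage note are placeholders. The mapping from error to likely cause is a rule of thumb, not a guarantee.

```python
import socket


def probe_port(host: str, port: int, timeout: float = 3.0) -> str:
    """Try a TCP connection and classify the outcome."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        return "refused"   # host reachable, but nothing listening (or actively rejected)
    except socket.timeout:
        return "timeout"   # often a firewall silently dropping the packets
    except OSError as err:
        return f"error: {err}"
```

Usage: `probe_port("192.168.0.10", 8080)`. "open" means the firewall lets the traffic through, and any remaining failure sits in IIS or the API itself. "timeout" typically means the inbound rule is still missing.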


-

Share an IIS site or API on the local intranet (Windows 10/11)

https://docs.microsoft.com/en-us/iis/get-started/getting-started-with-iis/create-a-web-site

https://superuser.com/a/263893/237029

Open firewall ports for the specific site, because by default only port 80 (used by the Default Web Site) is open.

Always start with a simple landing page and add API or business-domain functions later on. Step by step, eliminate possible error sources.

-

Logging in ASP.NET Web API Pitfalls

First of all: use a log viewer, e.g. LogViewPlus or the lightweight LogViewer from uvviewsoft.com. It makes your life a lot easier.

Pitfall 1: log files getting too big.
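The usual fix is size-based (or date-based) rolling, where the logger starts a new file at a size limit and deletes the oldest ones. In ASP.NET this is configured in whichever logging provider you use, and the exact settings differ per provider. To show the mechanism itself, this sketch swaps in Python's standard RotatingFileHandler. It is an illustration of the idea, not the ASP.NET configuration. Disk usage stays bounded at roughly (backups + 1) × max_bytes.

```python
import logging
from logging.handlers import RotatingFileHandler


def make_rotating_logger(path: str, max_bytes: int = 1_000_000, backups: int = 5) -> logging.Logger:
    """Logger whose file rolls over at max_bytes, keeping `backups` old files (path.1 .. path.N)."""
    logger = logging.getLogger(f"api.{path}")
    logger.setLevel(logging.INFO)
    handler = RotatingFileHandler(path, maxBytes=max_bytes, backupCount=backups, encoding="utf-8")
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger
```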

-

Semi-dependent API call sequences in the REST realm

https://www.quora.com/What-does-state-mean-in-Representational-State-Transfer-REST

The fundamental explanation is:

No client session state on the server. But this time we introduce builder.Services.AddSingleton<XObj>();

From my observation: I activated the Init function after tearing, because the PICC timeout occurred afterwards: Tcl: //ERROR (code 5): PICC response timed out.

Tcl transmissions worked again after the init protocols.

err = TransmitTearing(txBuffer, out rxBuffer);
initResult = SendActivationHttpRequest().GetAwaiter().GetResult();

-

tearing smartcard RF power

Tearing power from cards during command execution. The Keolabs SPulse option, triggered by the PCD_EOF event of the command, drives the RF_POWER output; the cycles parameter aligns the tearing moment.

keo.Spulse.LoadSpulseCsvFile(filepath, fdt, (uint)eFrameTypeFormat.FRAME_TYPE_SPULSE, (uint)eEmulatorLoadSpulseMode.STAND_ALONE);
keo.Spulse.EnableSpulse((uint)eEmulatorSpulseEvent.SP_PCD_EOF, (uint)eEmulatorSpulseOutput.SP_RF_POWER);
keo.Reader.ISO14443.SendTclCommand(0x00, 0x00, ref txBuffer[0], (uint)txBuffer.Length, out rxBuffer[0], (uint)rxBuffer.Length, out rxBufferLength);

-

How to move smartcard states (ISO/IEC 14443-3)

idle, active, selected

UID

CID
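The state names above are informal. Below is a minimal sketch of the ISO/IEC 14443-3 Type A card state machine as I understand it, with several simplifications. There is no POWER-OFF state and no distinction of the READY*/ACTIVE* sub-states entered from HALT. Any unexpected command drops back to IDLE, whereas a real card woken from HALT returns to HALT instead. The UID is resolved during anticollision/SELECT. The CID is only assigned later, by RATS, when entering the ISO/IEC 14443-4 protocol state.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    READY = auto()
    ACTIVE = auto()
    HALT = auto()
    PROTOCOL = auto()  # ISO/IEC 14443-4 layer, entered via RATS


# (state, command) -> next state; simplified Type A transitions.
TRANSITIONS = {
    (State.IDLE, "REQA"): State.READY,
    (State.IDLE, "WUPA"): State.READY,
    (State.HALT, "WUPA"): State.READY,        # REQA is ignored in HALT
    (State.READY, "ANTICOLLISION"): State.READY,
    (State.READY, "SELECT"): State.ACTIVE,    # complete UID selected
    (State.ACTIVE, "HLTA"): State.HALT,
    (State.ACTIVE, "RATS"): State.PROTOCOL,   # CID assigned in the RATS parameter byte
    (State.PROTOCOL, "DESELECT"): State.HALT,
}


def step(state: State, command: str) -> State:
    """Apply one command. Unknown commands are ignored in IDLE/HALT; elsewhere
    this simplified model resets to IDLE."""
    nxt = TRANSITIONS.get((state, command))
    if nxt is not None:
        return nxt
    return state if state in (State.IDLE, State.HALT) else State.IDLE
```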

-

cryptography

Moving keysets: AES, DES

"Keyset_48_AES": "",
"Keyset_00_DES": "",
"Keyset_FF_DES": "",

AES round function (c wiki)

-

VS Code and ProxiLAB

Tried SCRIPTIS, but its debugger is flaky, so VS Code got the shot once again.

error = ProxiLAB.Reader.ISO14443.SendTclCommand(0x00, 0x00, TxBuffer, RxBuffer)
if error[0]:
    print("Tcl: {0}".format(ProxiLAB.GetErrorInfo(error[0])))
    PopMsg += "Tcl: {0}".format(ProxiLAB.GetErrorInfo(error[0])) + "\n"
else:
    print("Tcl response: " + ''.join(["0x%02X " % x for x in RxBuffer.value]))
    PopMsg += "Tcl response: " + ''.join(["0x%02X " % x for x in RxBuffer.value]) + "\n"

Tcl response: 0x6A 0x86
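The response 0x6A 0x86 is an ISO/IEC 7816-4 status word meaning "incorrect parameters P1-P2". So the transmission itself worked, and the card application rejected the command's P1/P2. A small lookup makes traces like this easier to read. The table covers only a handful of common status words and is not exhaustive.

```python
SW_MEANINGS = {
    0x9000: "success",
    0x6700: "wrong length",
    0x6982: "security status not satisfied",
    0x6A82: "file or application not found",
    0x6A86: "incorrect parameters P1-P2",
    0x6D00: "instruction code not supported",
    0x6E00: "class not supported",
}


def decode_sw(rx: bytes) -> str:
    """Decode the trailing status word (SW1 SW2) of an APDU response."""
    if len(rx) < 2:
        raise ValueError("response shorter than a status word")
    sw1, sw2 = rx[-2], rx[-1]
    sw = (sw1 << 8) | sw2
    if sw in SW_MEANINGS:
        return SW_MEANINGS[sw]
    if sw1 == 0x61:  # SW2 = number of response bytes still available
        return f"{sw2} more bytes available (GET RESPONSE)"
    if sw1 == 0x6C:  # SW2 = exact Le the card expects
        return f"wrong Le, exact length is {sw2}"
    return f"unknown status word {sw:04X}"


if __name__ == "__main__":
    print(decode_sw(bytes([0x6A, 0x86])))  # incorrect parameters P1-P2
```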

-

Keolabs ProxiLAB Quest

Doing some #python tests (GetCard, SendCommand) for ISO/IEC 14443 smartcards.

- Python runs and traces in the Quest software as well as in the RGPA software; the disadvantage is the missing debugging comfort

- So I moved to VS Code with Python and the Keolabs Python library file

- Searching for the implementation file and possible DLLs for C# integration

- Poller0: PCD (proximity coupling device) and PICC (proximity integrated circuit card)

- The challenge is to set up a full Python environment outside the delivered Quest software. Are full details of the API functions available?

https://diglib.tugraz.at/download.php?id=5f588b91684cf&location=browse

import logging

logger = logging.getLogger(__name__)


def inventory_iso14443A(self):
    """
    By sending a 0xA0 command to the EVM module, the module will carry out
    the whole ISO14443 anti-collision procedure and return the tags found.

        >>> Req type A (0x26)
        <<< ATQA (0x04 0x00)
        >>> Select all (0x93, 0x20)
        <<< UID + BCC
    """
    response = self.issue_evm_command(cmd='A0')
    for itm in response:
        iba = bytearray.fromhex(itm)
        # Assume 4-byte UID + 1 byte Block Check Character (BCC)
        if len(iba) != 5:
            logger.warning('Encountered tag with UID of unknown length')
            continue
        # BCC is the XOR of the 4 UID bytes, so XOR over all 5 bytes must be 0
        if iba[0] ^ iba[1] ^ iba[2] ^ iba[3] ^ iba[4] != 0:
            logger.warning('BCC check failed for tag')
            continue
        uid = itm[:8]  # hex string, so each byte is two chars
        logger.debug('Found tag: %s (%s) ', uid, itm[8:])
        yield uid
    # See https://github.com/nfc-tools/libnfc/blob/master/examples/nfc-anticol.c

-

cache hacks

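Compiler Explorer:

https://godbolt.org/z/kANkNL

void maccess(void *p) { asm volatile("movq (%0), %%rax\n" : : "c"(p) : "rax"); }

This moves a quadword from the memory address in p into the rax register.

AT&T syntax? Yes: the source operand comes first, registers carry a % prefix (escaped as %% inside extended inline asm), and the q suffix marks the 64-bit operand size.

shm_open and mmap: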

quote: mmap works in multiples of the page size on your system. If you’re doing this on i386/amd64 or actually most modern CPUs, this will be 4096.

In the man page of mmap on my system it says: “offset must be a multiple of the page size as returned by sysconf(_SC_PAGE_SIZE).”. On some systems for historical reasons the length argument may be not a multiple of page size, but mmap will round up to a full page in that case anyway.
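Because the offset has to be page-aligned, a common trick is to map from the boundary just below the wanted offset and then index into the mapping. Python's mmap module enforces the same kind of constraint, so it can illustrate the idea. In Python the offset must be a multiple of mmap.ALLOCATIONGRANULARITY, which equals the page size on Linux but is 64 KiB on Windows. The file path and offsets are placeholders.

```python
import mmap


def read_at(path: str, offset: int, length: int) -> bytes:
    """Read `length` bytes at an arbitrary `offset` via mmap, respecting offset alignment."""
    gran = mmap.ALLOCATIONGRANULARITY
    aligned = (offset // gran) * gran  # round the offset down to a legal boundary
    delta = offset - aligned           # where the wanted bytes start inside the mapping
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), delta + length, access=mmap.ACCESS_READ, offset=aligned) as m:
            return m[delta:delta + length]
```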

-

compiler life

src/test/resources/public/input/lexer/fail01.jova

-

Gradle and ANTLR for compiler setups

Commands:

./gradlew compileJava

./gradlew compileTestJava

./gradlew printTree -PfileName=PATH_TO_JOVA_FILE

./gradlew clean

./gradlew compileJava && ./gradlew compileTestJava && ./gradlew printTree -PfileName=PATH_TO_JOVA_FILE && ./gradlew clean

./gradlew printTree -PfileName=main/antlr/at/tugraz/ist/cc/Calc.g4

gradlew permission denied (usually fixed with chmod +x gradlew):

https://www.cloudhadoop.com/gradlew-permission-denied/

src/test/resources/public/input/lexer

-

MPC TTP SPDZ

SPDZ online phase

https://bristolcrypto.blogspot.com/2016/10/what-is-spdz-part-1-mpc-circuit.html

https://bristolcrypto.blogspot.com/2016/10/what-is-spdz-part-2-circuit-evaluation.html

https://bristolcrypto.blogspot.com/2016/11/what-is-spdz-part-3-spdz-specifics.html

Oblivious transfer

https://crypto.stanford.edu/pbc/notes/crypto/ot.html

(c) tug

-

homomorphic encryption

https://www.techtarget.com/searchsecurity/definition/homomorphic-encryption

quote: “

Homomorphic encryption is the conversion of data into ciphertext that can be analyzed and worked with as if it were still in its original form.

Homomorphic encryptions allow complex mathematical operations to be performed on encrypted data without compromising the encryption. In mathematics, homomorphic describes the transformation of one data set into another while preserving relationships between elements in both sets. The term is derived from the Greek words for “same structure.” Because the data in a homomorphic encryption scheme retains the same structure, identical mathematical operations — whether they are performed on encrypted or decrypted data — will yield equivalent results.

Homomorphic encryption is expected to play an important part in cloud computing, allowing companies to store encrypted data in a public cloud and take advantage of the cloud provider’s analytic services.

Here is a very simple example of how a homomorphic encryption scheme might work in cloud computing:

- Business XYZ has a very important data set (VIDS) that consists of the numbers 5 and 10. To encrypt the data set, Business XYZ multiplies each element in the set by 2, creating a new set whose members are 10 and 20.

- Business XYZ sends the encrypted VIDS set to the cloud for safe storage. A few months later, the government contacts Business XYZ and requests the sum of VIDS elements.

- Business XYZ is very busy, so it asks the cloud provider to perform the operation. The cloud provider, who only has access to the encrypted data set, finds the sum of 10 + 20 and returns the answer 30.

- Business XYZ decrypts the cloud provider’s reply and provides the government with the decrypted answer, 15.

”
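The quoted "multiply by 2" example only illustrates the idea and offers no secrecy. A real additively homomorphic scheme is Paillier: multiplying two ciphertexts yields an encryption of the sum of the plaintexts. This is a toy sketch with tiny hard-coded primes. It is insecure and for illustration only; real use needs primes of at least 1024 bits and a vetted library. It reproduces the quoted 5 + 10 = 15 example.

```python
import math
import secrets

# Toy parameters: far too small to be secure, chosen only to keep numbers readable.
P, Q = 293, 433
N = P * Q
N2 = N * N
G = N + 1                                          # common simple choice of generator
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lambda = lcm(p - 1, q - 1)
MU = pow(LAM, -1, N)                               # valid shortcut because G = N + 1


def encrypt(m: int) -> int:
    """c = g^m * r^n mod n^2 with random r coprime to n (so equal plaintexts differ)."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N2) * pow(r, N, N2)) % N2


def decrypt(c: int) -> int:
    """m = L(c^lambda mod n^2) * mu mod n, with L(u) = (u - 1) / n."""
    u = pow(c, LAM, N2)
    return ((u - 1) // N) * MU % N


def add_encrypted(c1: int, c2: int) -> int:
    """Homomorphic addition: E(m1) * E(m2) mod n^2 decrypts to m1 + m2 mod n."""
    return (c1 * c2) % N2


if __name__ == "__main__":
    # The "cloud" only sees ciphertexts, yet its product decrypts to the sum.
    print(decrypt(add_encrypted(encrypt(5), encrypt(10))))  # 15
```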

There is a Python library, PySEAL:

https://gab41.lab41.org/pyseal-homomorphic-encryption-in-a-user-friendly-python-package-e27547a0b62f

https://blog.openmined.org/build-an-homomorphic-encryption-scheme-from-scratch-with-python/

https://bit-ml.github.io/blog/post/homomorphic-encryption-toy-implementation-in-python/

-

Private Information Retrieval

Additive Secret Sharing

Since all shares (except for one) are chosen randomly, every share is indistinguishable from a random value, and no one can learn anything about a by observing at most n − 1 shares.

Shamir Secret Sharing

A drawback of additive secret sharing is that parties can drop out and fail to provide their share, and without all n shares the secret cannot be reconstructed.

–

For both sharing methods, holders of the secret shares can compute linear functions on their shares.
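Here is a minimal sketch of additive secret sharing over a prime field. It shows the two properties noted above: the first n − 1 shares are uniformly random, and parties can evaluate linear functions locally on their shares without talking to each other. The modulus and party count are arbitrary choices for illustration.

```python
from __future__ import annotations

import secrets

PRIME = 2**61 - 1  # arbitrary prime modulus (a Mersenne prime) for the field


def share(secret: int, n: int) -> list[int]:
    """Split secret into n additive shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n - 1)]  # uniformly random
    shares.append((secret - sum(shares)) % PRIME)               # fixes the sum
    return shares


def reconstruct(shares: list[int]) -> int:
    """All n shares are needed: their sum mod PRIME is the secret."""
    return sum(shares) % PRIME


def linear_combination(a_shares: list[int], b_shares: list[int], alpha: int, beta: int) -> list[int]:
    """Party i locally computes alpha*a_i + beta*b_i; the results share alpha*a + beta*b."""
    return [(alpha * a + beta * b) % PRIME for a, b in zip(a_shares, b_shares)]


if __name__ == "__main__":
    a, b = share(7, 3), share(5, 3)
    print(reconstruct(linear_combination(a, b, 2, 3)))  # 2*7 + 3*5 = 29
```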

PrivaGram

Encode the index of the chosen image as a bit string using one-hot encoding.
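Combining the one-hot query with XOR gives the classic two-server PIR scheme:

1. The client sends a random bit string to one server, and the same string XORed with the one-hot vector of the wanted index to the other.
2. Each server XORs together the records its bits select.
3. The client XORs the two answers to get the wanted record.

It is private only as long as the two servers do not collude. This sketch assumes equal-length byte records; image data would be padded to a common length. It is the textbook scheme, not necessarily PrivaGram's exact protocol.

```python
from __future__ import annotations

import secrets
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def make_queries(index: int, n: int) -> tuple[list[int], list[int]]:
    """Client side: random bit vector q1, and q2 = q1 XOR one_hot(index)."""
    q1 = [secrets.randbelow(2) for _ in range(n)]
    one_hot = [1 if i == index else 0 for i in range(n)]
    q2 = [a ^ b for a, b in zip(q1, one_hot)]
    return q1, q2


def answer(db: list[bytes], query: list[int]) -> bytes:
    """Server side: XOR of all records whose query bit is 1 (all zeros if none)."""
    zero = bytes(len(db[0]))
    return reduce(xor_bytes, (rec for rec, bit in zip(db, query) if bit), zero)


def pir_fetch(db: list[bytes], index: int) -> bytes:
    q1, q2 = make_queries(index, len(db))
    # In a real deployment each answer() runs on a separate, non-colluding server.
    # Every record except db[index] appears in both answers or neither, so it cancels out.
    return xor_bytes(answer(db, q1), answer(db, q2))
```

Each server on its own sees only a uniformly random bit string, so it learns nothing about the index.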

XOR

Adding robustness

Shamir secret sharing instead of additive secret sharing

- robust against servers dropping out: k-out-of-l PIR

- at least t+1 servers are required to reconstruct the secret: t-private l-server PIR
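Here is a minimal sketch of Shamir secret sharing with the threshold property noted above. The secret is the constant term of a random polynomial of degree t, and each of the l servers gets one point on it. Any t + 1 points recover the secret by Lagrange interpolation at x = 0, so up to l − t − 1 servers may drop out. Field size and parameters are illustrative.

```python
from __future__ import annotations

import secrets

PRIME = 2**61 - 1  # illustrative prime field


def shamir_share(secret: int, t: int, l: int) -> list[tuple[int, int]]:
    """Points (x, f(x)) for x = 1..l of a random degree-t polynomial f with f(0) = secret."""
    coeffs = [secret % PRIME] + [secrets.randbelow(PRIME) for _ in range(t)]

    def f(x: int) -> int:
        y = 0
        for c in reversed(coeffs):  # Horner's rule
            y = (y * x + c) % PRIME
        return y

    return [(x, f(x)) for x in range(1, l + 1)]


def shamir_reconstruct(points: list[tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0; needs at least t + 1 points with distinct x."""
    secret = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if j != i:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret
```

Like additive shares, Shamir shares can be added pointwise and scaled by public constants. That is why the linear-function remark above applies to both methods.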

t-private k-out-of-l PIR protocol

adding homomorphic encryption

collude

final protocol

tbd

-

Secure Classification

Secure Multiparty Computation like Yao’s Millionaires’ Problem [Yao82]

SPDZ

http://bristolcrypto.blogspot.com/2016/10/what-is-spdz-part-1-mpc-circuit.html

A secret value x is shared amongst n parties, such that the sum of all shares is equal to x.

- uniformly at random

Adding security

- In SPDZ, MACs are used to authenticate the shares.

- global MAC key

- each party knows a share of the global MAC key

sharing an input value

- sharing a masked version of x

- each party computes <x>
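Here is a sketch of SPDZ-style authenticated sharing as described above. Every value x is additively shared together with shares of its MAC α·x, under a global key α that the parties themselves only hold in shares. The sketch covers only the data representation, local addition, and a naive MAC check on an opened value. It takes several simplifications:

- A trusted dealer does the input sharing instead of the masked-input step.
- The check values are revealed directly, whereas the real protocol commits to them first (the MAC check and commitment steps listed next in the notes).
- Multiplication, which needs preprocessed triples, is not modelled.

Parameters are illustrative.

```python
from __future__ import annotations

import secrets

PRIME = 2**61 - 1  # illustrative field modulus


def additive_share(value: int, n: int) -> list[int]:
    parts = [secrets.randbelow(PRIME) for _ in range(n - 1)]
    return parts + [(value - sum(parts)) % PRIME]


class SPDZ:
    def __init__(self, n: int):
        self.n = n
        self._alpha = secrets.randbelow(PRIME)  # SIMPLIFICATION: dealer knows the global MAC key
        self.alpha_shares = additive_share(self._alpha, n)  # each party holds one key share

    def input(self, x: int):
        """Dealer-style sharing of x: per-party pairs (x_i, gamma_i), sum(gamma_i) = alpha * x."""
        return list(zip(additive_share(x, self.n), additive_share(self._alpha * x % PRIME, self.n)))

    @staticmethod
    def add(a, b):
        """Local addition: value shares and MAC shares both add componentwise."""
        return [((xa + xb) % PRIME, (ga + gb) % PRIME) for (xa, ga), (xb, gb) in zip(a, b)]

    def open_and_check(self, shared) -> int:
        """Open x, then check that sum_i (gamma_i - alpha_i * x) == 0 (naive MAC check)."""
        x = sum(xi for xi, _ in shared) % PRIME
        sigma = [(g - a * x) % PRIME for (_, g), a in zip(shared, self.alpha_shares)]
        if sum(sigma) % PRIME != 0:
            raise ValueError("MAC check failed: a share was tampered with")
        return x
```

If a corrupt party shifts its value share by e without fixing the MAC, the check sum becomes −α·e. That is nonzero except with negligible probability, because α is random and unknown to that party.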

next:

opening a value

partially

output

directional output

MAC check protocol

coin tossing protocol

commitments

-

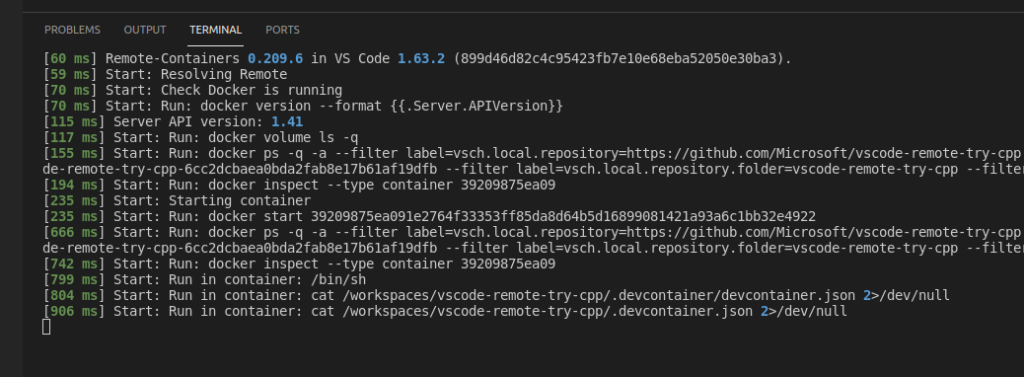

C++ in Docker and VS Code

https://code.visualstudio.com/docs/remote/containers

https://code.visualstudio.com/docs/remote/containers-tutorial

g++ main.cpp -o main.out

mkdir build && cd build && cmake .. && make test

cd build && cmake .. && make test

cmake .. && make test

docker container ls --all

docker exec -it quizzical_banach /bin/bash

docker ps -a

docker start NAME

docker container ls // running

// VS Code asks whether to reopen the workspace in the container…

apt list --installed

-

Boston Housing Data Analysis

API

https://www.tensorflow.org/api_docs/python/tf/keras/datasets/boston_housing/load_data

Samples contain 13 attributes of houses at different locations around the Boston suburbs in the late 1970s. Targets are the median values of the houses at a location (in k$).

http://lib.stat.cmu.edu/datasets/boston

404×13 = 5252

y_train, x_train: 404 samples

y_test, x_test: 102 samples

x: 13 attributes

y: 1 target scalar

y_train, y_test: numpy arrays of shape (num_samples,) containing the target scalars. The targets are float scalars typically between 10 and 50 that represent the home prices in k$.
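The 404/102 split follows from applying load_data's default test_split=0.2 to the 506 samples of the original dataset. My understanding of the Keras implementation is that, after shuffling, it takes the first int(506 × (1 − 0.2)) samples for training. Here is a plain-Python sketch of that arithmetic and of the resulting array shapes, with no TensorFlow needed.

```python
def split_sizes(n_samples: int, test_split: float = 0.2) -> tuple:
    """Train/test sizes when the first int(n * (1 - test_split)) samples go to training."""
    n_train = int(n_samples * (1 - test_split))  # int(404.8) -> 404
    return n_train, n_samples - n_train


N_SAMPLES, N_FEATURES = 506, 13  # Boston housing: 506 locations, 13 attributes each
n_train, n_test = split_sizes(N_SAMPLES)

x_train_shape, y_train_shape = (n_train, N_FEATURES), (n_train,)
x_test_shape, y_test_shape = (n_test, N_FEATURES), (n_test,)
train_values = n_train * N_FEATURES  # number of feature values in x_train

if __name__ == "__main__":
    print(x_train_shape, y_train_shape, x_test_shape, y_test_shape, train_values)
    # (404, 13) (404,) (102, 13) (102,) 5252
```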